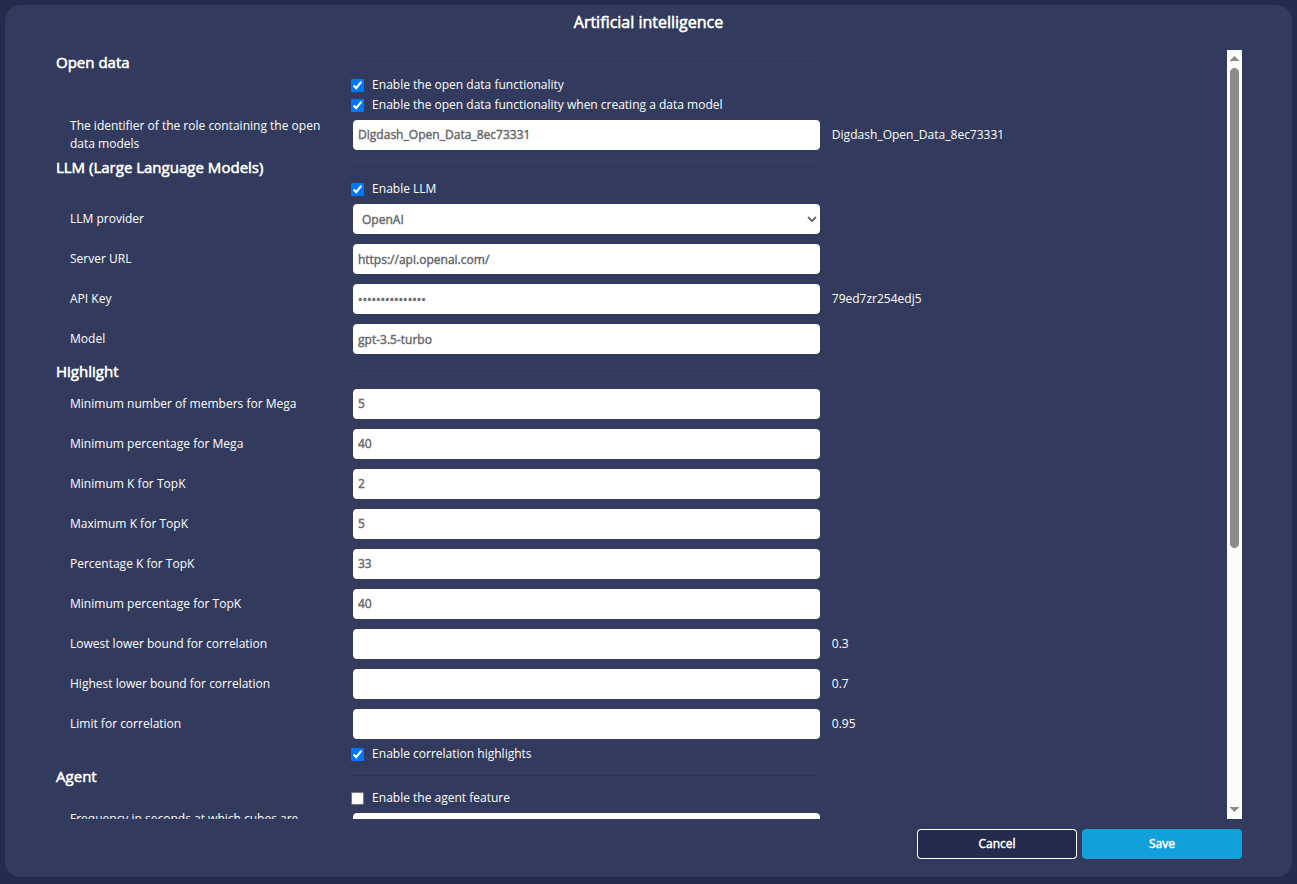

Artificial intelligence

This section allows you to configure the options for features using artificial intelligence: data enrichment with open data, generation of a transformation function, display of highlights and DigDash Agent.

Open data

Here you can activate the open data functionality:

- Check the Enable the open data functionality box.

➡ The Enrich with open data command is then available in the data model context menu.

It is also possible to detect automatically, when a data model is created, whether open data compatible with your data is available. To do this:

- Check the Enable open data functionality when creating a data model box.

➡ If open data compatible with your data is available, it will be offered to you when you create your data model (after clicking on the Finish button when configuring the data model in the Studio).

The field "The identifier of the role containing the open data models" holds the identifier of the role dedicated to DigDash Open Data models.

LLM (Large Language Model)

Here you can activate and specify the LLM (large language model) used by the AI wizard for the generation of transformation functions.

- Check the Activate LLM box.

- Select the LLM Provider from the drop-down list.

- Fill in the following information:

| | OpenAI | Google Gemini | Ollama |
|---|---|---|---|
| Server URL | https://api.openai.com | https://generativelanguage.googleapis.com/v1beta/models/model_name The URL contains the model used by Gemini, so model_name should be replaced by the name of the chosen model. For example: https://generativelanguage.googleapis.com/v1beta/models/gemini-1.5-flash-latest:generateContent See the list of available models: https://ai.google.dev/gemini-api/docs/models/gemini?hl=fr | Enter the server URL in the following format: http://[server]:[port] For example: http://lab1234.lan.digdash.com:11434 |
| API key | Enter your API key. See the paragraph Configuring an OpenAI API key if necessary. | Enter your API key. See the paragraph Configuring a Gemini API key if necessary. | Ollama does not require an API key. |
| Model | Enter the name of the chosen model, for example gpt-3.5-turbo. See the list of available models: https://platform.openai.com/docs/models | The model is not entered here but directly in the server URL. The field must remain empty. | Enter the model identifier. We recommend the following models: Codestral 22B, an LLM specialising in code generation (small, high-performance model), whose identifier with the quantization level Q4_K_M is codestral:22b-v0.1-q4_K_M ; Llama 3.3 70B, a general-purpose LLM for code-generating tasks, whose identifier with the quantization level Q4_K_M is llama3.3:70b-instruct-q4_K_M . See the list of available models: https://ollama.com/search |

- Click on Save.

To return to the default values, click Reset.
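The Server URL formats described above can be sketched as a small helper. This is only an illustration and not part of DigDash: the function `build_server_url` and its parameters are hypothetical. It assumes that the Gemini URL keeps the `:generateContent` suffix shown in the example and that Ollama listens on port 11434, as in the example URL.

```python
def build_server_url(provider: str, model_name: str = "",
                     server: str = "", port: int = 11434) -> str:
    """Return the value to type in the Server URL field (hypothetical helper)."""
    if provider == "openai":
        # OpenAI: fixed URL, the model goes in the separate Model field.
        return "https://api.openai.com"
    if provider == "gemini":
        # Gemini: the model name is part of the URL, the Model field stays empty.
        if not model_name:
            raise ValueError("Gemini needs the model name inside the URL")
        return ("https://generativelanguage.googleapis.com/v1beta/models/"
                f"{model_name}:generateContent")
    if provider == "ollama":
        # Ollama: http://[server]:[port], no API key required.
        if not server:
            raise ValueError("Ollama needs the server host name")
        return f"http://{server}:{port}"
    raise ValueError(f"Unknown provider: {provider}")


if __name__ == "__main__":
    print(build_server_url("gemini", model_name="gemini-1.5-flash-latest"))
    print(build_server_url("ollama", server="lab1234.lan.digdash.com"))
```

The resulting string is what you would paste into the Server URL field before clicking Save.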

Setting prompts

The prompts (or instructions) used by default for generating data transformations are stored in the /home/digdash/webapps/ddenterpriseapi/WEB-INF/classes/resources/llm directory. There is a prompt for each provider. ❗These prompts MUST NOT be changed.

You can define a custom prompt based on the same template, keeping the last lines:

request: #/*REQUEST_CLIENT*/#

To be taken into account, the file must be named custom.prompt and placed in the /home/digdash/appdata/default/Enterprise Server/ddenterpriseapi/config directory.
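Before copying a custom prompt to the server, it can help to check that the request placeholder is still in place. The sketch below is an illustration, not a DigDash tool: the function name `is_valid_custom_prompt` is hypothetical, and it assumes that the only mandatory ending is the `request: #/*REQUEST_CLIENT*/#` line shown above.

```python
from pathlib import Path

# Target location documented above (used here for display only).
CUSTOM_PROMPT_PATH = Path(
    "/home/digdash/appdata/default/Enterprise Server/ddenterpriseapi/config"
) / "custom.prompt"

# Placeholder line that the custom prompt must keep at its end.
REQUEST_LINE = "request: #/*REQUEST_CLIENT*/#"


def is_valid_custom_prompt(text: str) -> bool:
    """Return True if the last non-empty line is the request placeholder."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    return bool(lines) and lines[-1] == REQUEST_LINE


if __name__ == "__main__":
    # The instruction text below is an invented example of prompt content.
    draft = "Generate a transformation function.\n" + REQUEST_LINE + "\n"
    print(is_valid_custom_prompt(draft), "->", CUSTOM_PROMPT_PATH)
```

The check ignores trailing blank lines, so a file that ends with an empty line after the placeholder is still accepted.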

Highlights

💡 See the View highlights page for more details on the use of highlights.

The highlights parameters are set to default values. You can change these values if you wish to influence the way in which highlights are identified.

| Parameter | Description |
|---|---|
| Minimum number of members for Mega | Minimum number of members the dimension must contain to be able to identify a mega contributor. |
| Minimum percentage for Mega | Minimum percentage of the total sum that the member must represent to be a mega contributor. By default, a member must contribute at least 40% of a given measure to be a mega contributor. |
| Minimum number K for TopK | Minimum number of members contributing at least the "Minimum percentage for TopK" to a given measure (total sum). By default, at least 2 members must contribute at least 40% of a given measure to be top contributors. |
| Maximum number K for TopK | Maximum number of members contributing at least the "Minimum percentage for TopK" to a given measure (total sum). By default, a maximum of 5 members must contribute at least 40% of a given measure to be top contributors. |
| Percentage K for TopK | Percentage of the number of members used to determine K. By default, K is equal to 33% of the number of members. A minimum of 6 members is required to obtain a Top2. |
| Minimum percentage for TopK | Minimum percentage of a given measure to which the K best members must contribute to be top contributors. By default, the K best members must contribute at least 40% to a given measure (total sum) to be top contributors. |
| Lowest lower bound for correlation | Minimum correlation coefficient for a dimension with 10 members for correlation to be taken into account. By default, the minimum correlation coefficient is 0.7. |
| Highest lower bound for correlation | Minimum correlation coefficient for a dimension with 50 or more members for correlation to be taken into account. By default, the minimum correlation coefficient is 0.3. |
| Limit for correlation | Limit of the correlation coefficient beyond which the relationship is no longer considered a correlation. |
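To make the two Mega parameters more concrete, here is a minimal sketch of how such a rule could be evaluated. It is an assumption made for illustration, not DigDash's actual implementation: the function `find_mega_contributor` is hypothetical, the 40% default comes from the table above, and the minimum number of members is passed explicitly because its default value is not stated here.

```python
from typing import Dict, Optional


def find_mega_contributor(values: Dict[str, float], min_members: int,
                          min_share: float = 0.40) -> Optional[str]:
    """Return the member that qualifies as mega contributor, or None.

    values      -- measure value per dimension member
    min_members -- "Minimum number of members for Mega"
    min_share   -- "Minimum percentage for Mega" as a fraction (0.40 = 40%)
    """
    # The dimension must contain enough members to be considered at all.
    if len(values) < min_members:
        return None
    total = sum(values.values())
    if total <= 0:
        return None
    # Only the largest member can reach the threshold first.
    member, value = max(values.items(), key=lambda item: item[1])
    return member if value / total >= min_share else None


if __name__ == "__main__":
    # Invented sample data: sales per region.
    sales = {"North": 50.0, "South": 30.0, "East": 20.0}
    print(find_mega_contributor(sales, min_members=3))
```

With the sample data, North represents 50% of the total and is returned. Raising `min_members` above the number of regions, or spreading the values more evenly, makes the function return `None`.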

Agent

This section allows you to configure the server parameters for the use of DigDash Agent. See the paragraph Configuring DigDash server settings for a detailed description.